How can we apply the principles of musical expression to create more expressive game controller input?

What if the aspects of music were mapped directly onto the input used to control a game, and onto the resulting behaviour of the game itself? The aspects of music [1] are:

- Pitch

- Dynamics

- Rhythm

- Articulation

- Timbre

- Order

Pitch is the frequency of a musical tone. Dynamics refers to the volume and/or velocity of a note. Rhythm is the regular recurrence of notes that sets a pattern to follow. Articulation is the intensity and the speed or duration of the variations with which a tone is played. Timbre is the specific sound quality of the instrument playing a note. Order is the composition of notes.

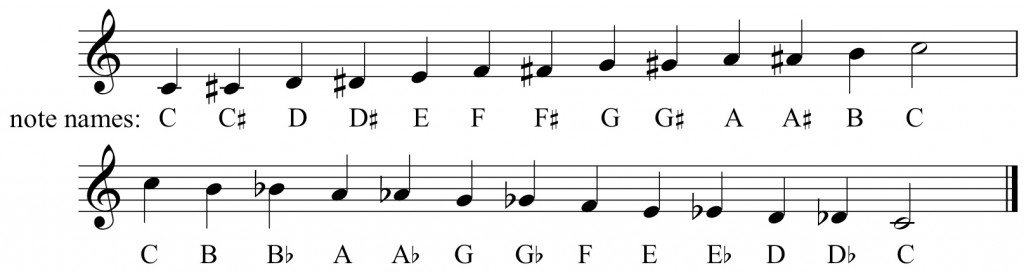

Pitch is a one-dimensional axis. It can be seen either as a ‘stepped’ axis (discrete notes like A, Bb and C#) or as an analog axis (all the tones between A and A#, for example).

The stepped view could be mapped to the available buttons (the A button for the pitch A, the B button for A#, and so on).
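
To make the stepped and analog views of pitch concrete, here is a minimal sketch. The button names, the semitone offsets, the 440 Hz base and the idea of reading the analog axis from a stick value between 0 and 1 are all assumptions made for illustration, not a real controller API.

```python
# Hypothetical button layout: each button sits a number of semitones above A (440 Hz).
BUTTON_TO_SEMITONE = {"A": 0, "B": 1, "X": 2, "Y": 3}


def semitone_to_frequency(semitones: float, base_hz: float = 440.0) -> float:
    """Equal-temperament frequency for a (possibly fractional) semitone offset."""
    return base_hz * 2 ** (semitones / 12)


def stepped_pitch(button: str) -> float:
    """Stepped axis: every button selects exactly one note."""
    return semitone_to_frequency(BUTTON_TO_SEMITONE[button])


def analog_pitch(stick: float, span_semitones: float = 12.0) -> float:
    """Analog axis: a stick value in [0, 1] glides across the span (assumed: one octave)."""
    stick = min(1.0, max(0.0, stick))  # clamp out-of-range readings
    return semitone_to_frequency(stick * span_semitones)
```

With this mapping the A button gives 440 Hz, the B button gives A# (about 466 Hz), and pushing the stick all the way reaches the A one octave higher.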

Dynamics refers to the volume and velocity of notes, so it seems natural to map it to the pressure applied to the input device. Dynamics could make use of the pressure sensitivity of a button, letting the player vary the press: starting with a firm press, for example, or transitioning smoothly from unpressed to pressed.
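
As a rough sketch of how a game might tell a firm press from a smooth one, the code below looks at how quickly a series of pressure readings reaches its peak. It assumes the button reports pressure as values between 0 and 1 at a fixed rate (60 samples per second here); the function names and the 0.05 second cutoff are hypothetical choices, not taken from any real controller.

```python
from typing import Sequence, Tuple


def attack_profile(samples: Sequence[float], dt: float = 1 / 60) -> Tuple[float, float]:
    """Return (peak pressure, seconds taken to reach 90% of that peak)."""
    if not samples:
        raise ValueError("need at least one pressure sample")
    peak = max(samples)
    for index, pressure in enumerate(samples):
        if pressure >= 0.9 * peak:
            return peak, index * dt
    # Not reached for pressures in [0, 1]; kept as a safe fallback.
    return peak, (len(samples) - 1) * dt


def classify_press(samples: Sequence[float], dt: float = 1 / 60,
                   firm_within: float = 0.05) -> str:
    """Label a press 'firm' if it peaks quickly, 'smooth' if it ramps up slowly."""
    peak, attack_time = attack_profile(samples, dt)
    if peak == 0:
        return "none"  # the button was never pressed
    return "firm" if attack_time <= firm_within else "smooth"
```

The peak could then drive something like volume, while the attack time distinguishes a sudden hit from a gradual squeeze, much as a musician shapes the start of a note.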

Articulation is part of the broader term dynamics, but focuses more on accent (putting emphasis on particular notes) and on the intensity with which particular notes are played. The overview in [3] covers many of the different articulations a musician can use while playing notes.

Timbre concerns the sound (the ‘final’ output) of the instrument. For example, a C played on a violin sounds different from the exact same C played on a piano. Timbre could therefore map to the output of the game: its audiovisual feedback. A single synthesizer can produce many kinds of sounds (simulated pianos, harpsichords, and even drums). In the same way, a game controller (like Sony PlayStation’s DualShock or Nintendo’s Wii Remote) does not change from game to game: the buttons stay in the same place. What changes is how each game interprets them, and that interpretation is what I am comparing to timbre.
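
The synthesizer analogy can be sketched in a few lines: the same input event goes in, and each game gives it its own "sound". The two games and their rules below are invented purely for illustration.

```python
from typing import Callable, Dict

# An interpretation turns (button, pressure) into some game-specific result.
Interpretation = Callable[[str, float], str]


def platformer(button: str, pressure: float) -> str:
    # Hypothetical rule: a harder press gives a higher jump.
    return f"{button}: jump at {round(pressure * 100)}% height"


def racer(button: str, pressure: float) -> str:
    # Hypothetical rule: the same press controls the throttle instead.
    return f"{button}: throttle at {round(pressure * 100)}%"


GAMES: Dict[str, Interpretation] = {"platformer": platformer, "racer": racer}


def interpret(game: str, button: str, pressure: float) -> str:
    """Same controller input, different 'timbre' depending on the game."""
    return GAMES[game](button, pressure)
```

Calling `interpret("platformer", "A", 0.5)` and `interpret("racer", "A", 0.5)` feeds in the identical press, yet the first describes a jump and the second a throttle setting.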

Part 2 of this article will focus on answering the question:

How could all these mappings result in more player expression in games?

1. Aspects of Music, Wikipedia

2. Tremolo, Wikipedia

3. Music Theory, Phrasing & Articulation, Wikipedia